You might wonder why developers invest time in software architecture when AI-powered tools can generate code so quickly. After all, why not just ship fast and start profiting? Interestingly, this question existed long before AI entered the coding scene. Robert Martin, author of the bestseller Clean Architecture, studied the impact of skipping the fundamentals of software architecture.

At first, rapid coding works well — features are released quickly, and everything seems fine. But once the MVP is live and real users start giving honest (and sometimes not-so-rosy) feedback, things begin to change. To retain your audience, you need to fix bugs quickly while continuing to develop new features. At this stage, poor structure can turn your codebase into a nightmare, harder to maintain than to rewrite from scratch.

“The goal of software architecture is to minimize the human resources required to build and maintain the system.” (Robert C. Martin)

However, not every architectural approach fits every product. Understanding the various types of software architecture helps your team choose the right foundation. Let’s start the journey together with DigitalMara and demystify those “complicated” terms your developers use.

Microservices Architecture: break it down to scale it up

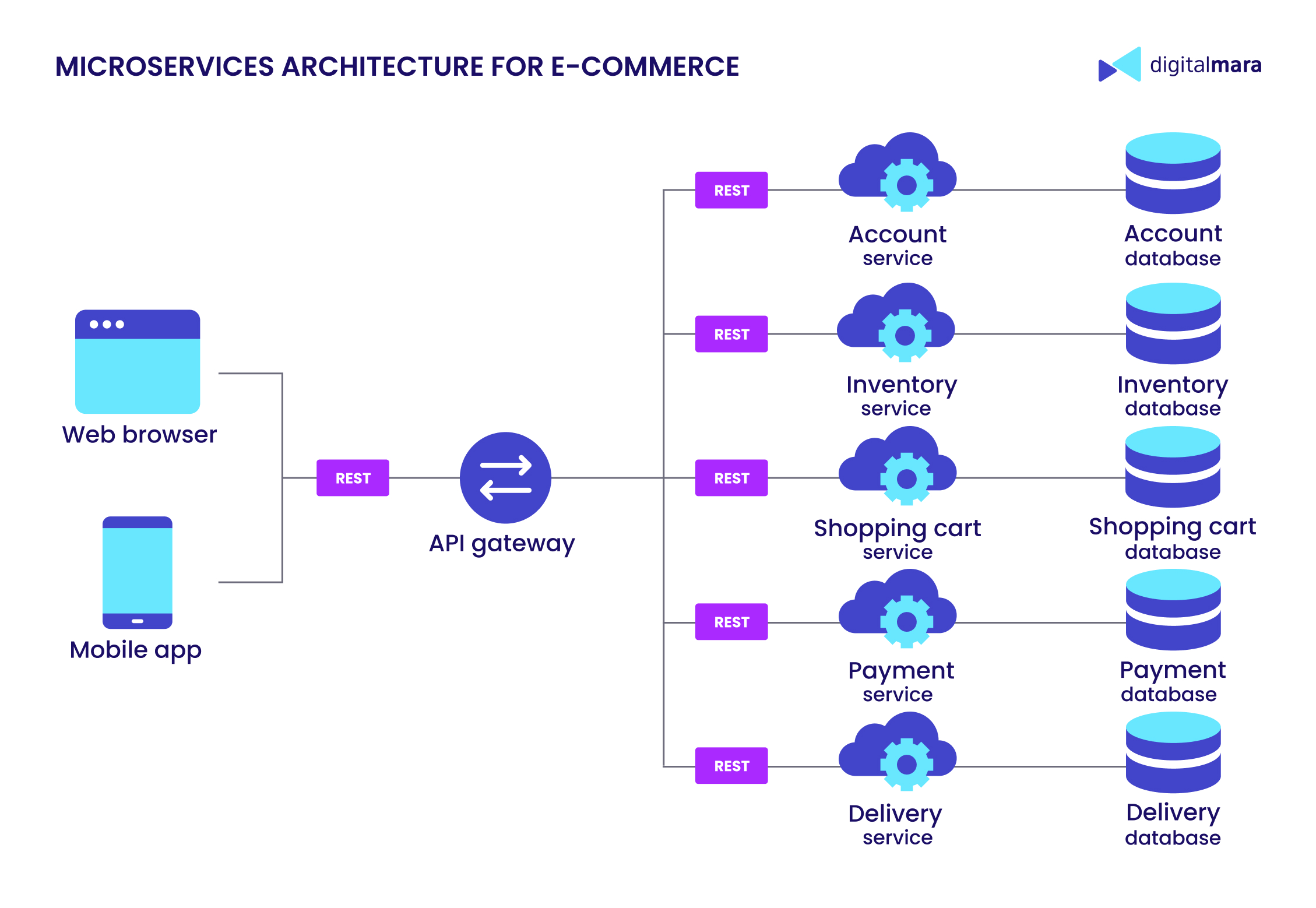

This approach divides the system into small, independent services, each handling a specific task and communicating via APIs. Unlike monolithic apps, where everything is bundled together, microservices let teams develop, deploy, and scale components separately. Each service also owns its data storage, so a single central database is less likely to become a bottleneck for the whole system.

Today, microservices architecture is widely adopted across the industry, especially for complex, cloud-native applications. Essentially a more granular evolution of Service-Oriented Architecture (SOA), it assigns discrete tasks to individual services: one might handle a high-load function, while another manages an entire domain like authentication. This model is particularly well-suited to AI software architecture, where components often require independent scaling.

Here’s how microservices architecture can look in a marketplace setup:
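In code, a minimal sketch of the idea might look like the following. It assumes a hypothetical marketplace with two services, `CatalogService` and `OrderService` (both names are invented for illustration). Plain method calls stand in for the HTTP or messaging APIs real services would use, and each service keeps its own private data store.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogService:
    """Owns product data; no other service reads its store directly."""
    _products: dict[str, float] = field(default_factory=dict)

    def add_product(self, sku: str, price: float) -> None:
        self._products[sku] = price

    def get_price(self, sku: str) -> float:
        # Stands in for a network call such as GET /products/{sku} (hypothetical route).
        return self._products[sku]


@dataclass
class OrderService:
    """Owns order data and reaches the catalog only through its API."""
    catalog: CatalogService
    _orders: list[dict] = field(default_factory=list)

    def place_order(self, sku: str, quantity: int) -> dict:
        total = self.catalog.get_price(sku) * quantity
        order = {"id": len(self._orders) + 1, "sku": sku, "total": total}
        self._orders.append(order)
        return order


catalog = CatalogService()
catalog.add_product("mug", 8.0)
orders = OrderService(catalog)
first_order = orders.place_order("mug", 3)
```

Because `OrderService` never touches the catalog's storage, either service could be rewritten, redeployed, or scaled without the other noticing, as long as the API between them stays stable.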

Benefits of microservices architecture:

- Faster development — teams can work in parallel, each focused on their own piece of the system without stepping on anyone’s toes.

- Defined responsibility — each microservice has one team in charge, making accountability and ownership straightforward.

- Scalability — high-load systems benefit from the ability to scale individual services as needed.

- Smooth updates — each microservice can be deployed or replaced independently, avoiding downtime for the entire system.

- Right tool for the job — different services can use different languages, frameworks, or databases depending on what fits best (Python for ML, Java for APIs, etc.).

Of course, there are challenges:

- Deployment — in the early stages, deploying microservices can be like using a sledgehammer to crack a nut. Often, it’s smarter to begin with a well-structured monolith and split it into independent parts as needs scale up.

- Data consistency — with each microservice managing its own data, syncing and ensuring consistency become trickier.

- Operations — more services mean more logs, metrics, and monitoring struggles. As the joke goes: “If a monolith fails, the app crashes. If a microservice fails, the app still crashes — but now you’ve got 12 dashboards to debug.” Tools like Kubernetes and a solid DevOps strategy are essential to tame the chaos.

The monolithic vs. microservices architecture debate isn’t about which approach is ‘better’; it’s about what fits your product’s current needs and future goals. Tech giants like Netflix, Spotify, and Uber leverage microservices to scale rapidly, but their journeys almost always began with something simpler.

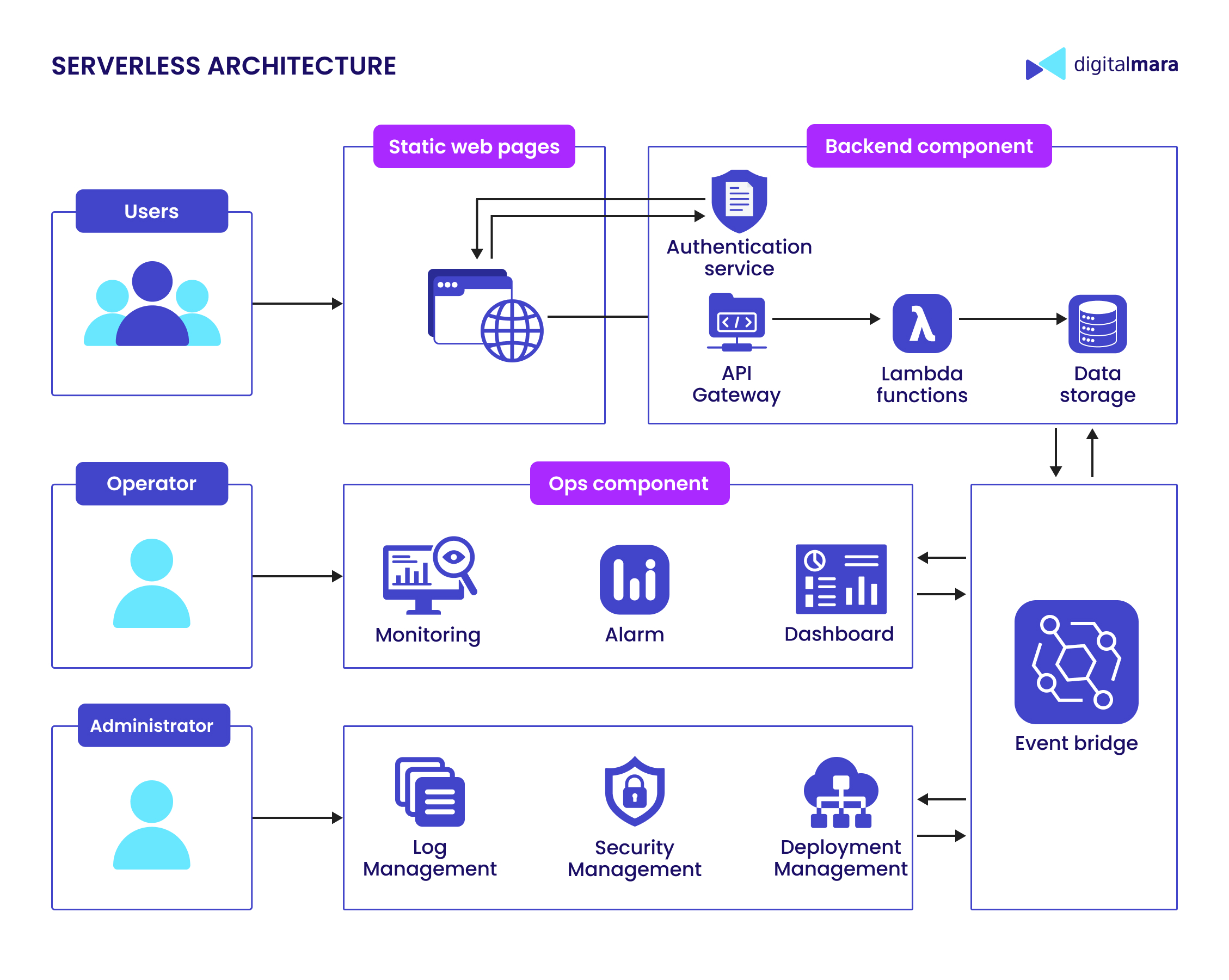

Serverless Architecture: the next step after microservices

Think of serverless as an evolution of enterprise software architecture: it’s all about breaking things apart and letting cloud providers handle the heavy lifting. And yeah, the name “serverless” is kinda funny. There are still servers, just not yours! Cloud platforms offer things like Backend-as-a-Service (BaaS) for databases and ready-made services, or Functions-as-a-Service (FaaS) like AWS Lambda for running code on demand. For AI projects especially, it can be a game-changer: your system components scale automatically and you pay only for what you use. Even more importantly, you can focus on building cool models instead of babysitting servers.
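To make the FaaS idea concrete, here is a minimal sketch of a function written in the handler shape AWS Lambda uses for Python, `handler(event, context)`. The `name` field in the event and the greeting payload are assumptions made up for this example, not part of any real API contract.

```python
import json


def handler(event, context):
    """Entry point in the shape AWS Lambda expects for Python functions."""
    # 'name' is a hypothetical field of the incoming event.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation; on the platform the provider calls handler() for you.
response = handler({"name": "DigitalMara"}, None)
```

Notice what is missing: no web server, no process manager, no scaling logic. The function only describes what to do with one event, and the platform decides when and where to run it.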

Key serverless benefits are:

- Simplified operations — cloud providers standardize deployment processes, significantly reducing management overhead.

- Serverless cost savings — the pay-per-use model eliminates idle resource expenses, ensuring you only pay for actual consumption.

- Compliance assurance — providers keep the underlying infrastructure certified against standards like PCI DSS and offer tooling that supports GDPR compliance, which reduces (but doesn’t remove) the compliance work left to your team.

Of course, these systems have challenges, too. These include:

- Vendor lock-in — dependence on a specific provider may force architectural compromises when platform capabilities fall short. This often pushes teams toward hybrid cloud solutions, adding design complexity.

- Resource cost management — the flexibility of serverless can be a double-edged sword. A small bug in your code could trigger runaway resource usage, and you might only notice when your account gets suspended over a six-figure bill.

- Latency issues — services that aren’t often used may experience delays due to cold starts, where the system takes longer to spin up after being idle.
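One common way to soften the cold-start cost is to do expensive setup once and reuse it while the function instance stays warm. The sketch below simulates that pattern locally. `load_model` is a hypothetical stand-in for slow initialization, and the timings come from a simulated delay, not from a real cloud platform.

```python
import time

_model = None  # kept between invocations while the instance stays warm


def load_model():
    """Hypothetical expensive setup, e.g. loading weights or opening connections."""
    time.sleep(0.05)  # simulate slow initialization
    return lambda text: len(text.split())


def handler(event, context):
    global _model
    if _model is None:  # only true on a cold start
        _model = load_model()
    return {"word_count": _model(event["text"])}


start = time.perf_counter()
cold = handler({"text": "first call pays the setup cost"}, None)
cold_seconds = time.perf_counter() - start

start = time.perf_counter()
warm = handler({"text": "second call reuses it"}, None)
warm_seconds = time.perf_counter() - start
```

The first call pays for initialization and later calls skip it. This doesn't eliminate cold starts, but it keeps them from repeating on every request.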

When weighing up serverless vs. microservices, the big difference lies in who is stuck dealing with infrastructure headaches. With serverless, you just toss your code into the cloud and let it run, no servers to manage. Microservices still give you flexibility, but you’re responsible for dealing with containers, clusters, and all that setup. Both help your app scale, but serverless cranks up the abstraction, reducing development cycle time since you’re not burning hours on infrastructure configurations.

Hexagonal Architecture: structuring enterprise software for adaptability

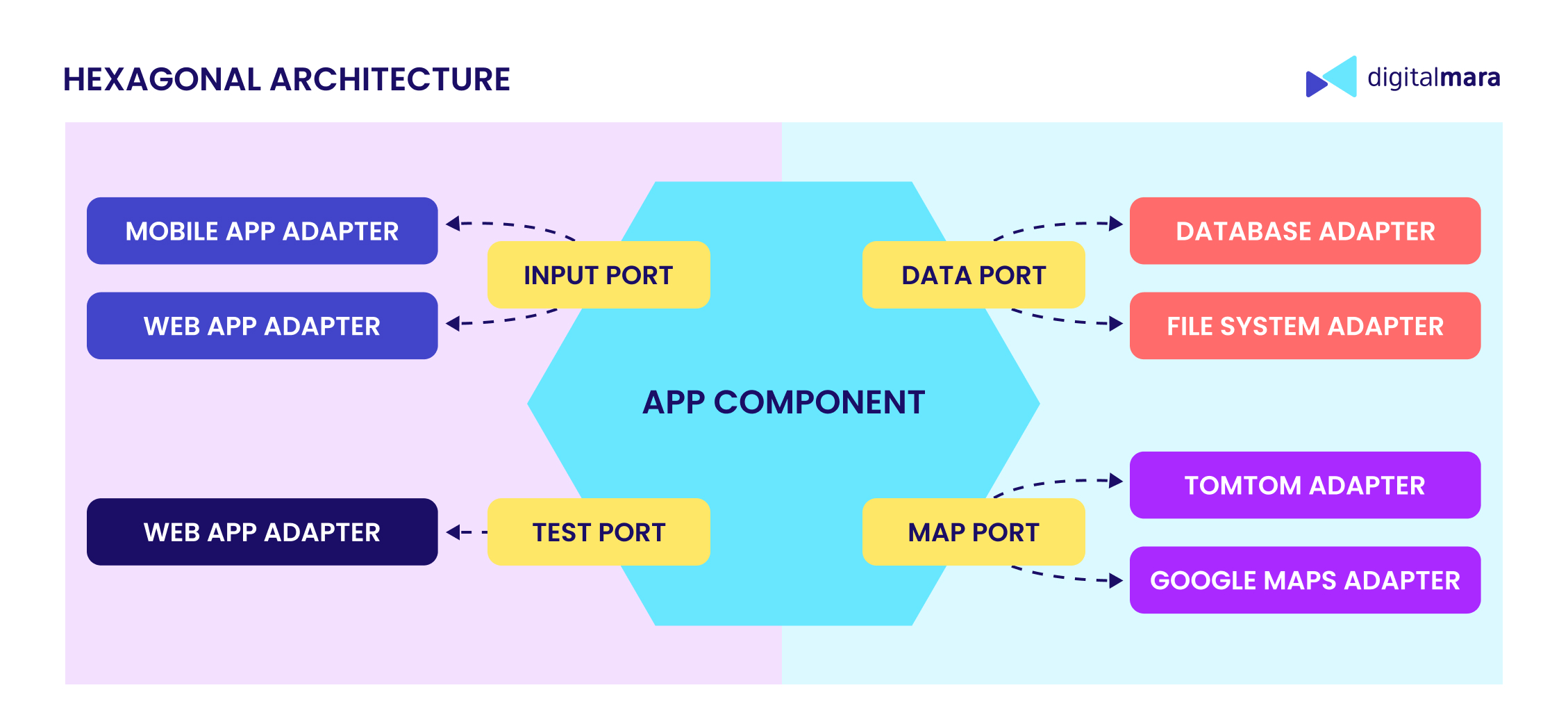

You might think hexagonal architecture relates to the number six, but it doesn’t. Its creator, Alistair Cockburn, chose the hexagon simply because it’s more flexible than boring old rectangles used for layered architectures. These extra sides symbolize adaptability, showing how the system smoothly interacts with its environment.

Today’s systems are constantly talking to one another, and your software needs to keep up as external services evolve. Hexagonal architecture’s ports and adapters act like shock absorbers. They shield your core logic from the chaos outside, making upgrades smoother and less risky:

- A port is an entry or exit point that is agnostic to specific consumers or implementations, often represented as an interface in code.

- An adapter is a layer that “adapts” one interface to another, essentially connecting the abstract port to other, usually third-party, software.

As shown in the diagram below, there are two types of adapters:

- Primary (driving) adapters: These sit on the left side, typically representing the UI or input mechanisms. They initiate interactions and depend on ports that trigger use cases within the application.

- Secondary (driven) adapters: Located on the right, these respond to events. They implement the ports and wrap external services like databases, APIs, or file systems.
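The roles of ports and the two adapter types can be sketched in a few lines. Everything here is illustrative: `OrderRepository`, `PlaceOrder`, and `cli_adapter` are hypothetical names, and an in-memory dictionary stands in for a real database.

```python
from typing import Protocol


class OrderRepository(Protocol):
    """Driven port: what the core needs from storage, with no vendor details."""
    def save(self, order_id: str, total: float) -> None: ...
    def get_total(self, order_id: str) -> float: ...


class InMemoryOrderRepository:
    """Secondary (driven) adapter: implements the port for one technology."""
    def __init__(self) -> None:
        self._rows: dict[str, float] = {}

    def save(self, order_id: str, total: float) -> None:
        self._rows[order_id] = total

    def get_total(self, order_id: str) -> float:
        return self._rows[order_id]


class PlaceOrder:
    """Core use case: depends only on the port, never on a concrete adapter."""
    def __init__(self, repository: OrderRepository) -> None:
        self._repository = repository

    def execute(self, order_id: str, prices: list[float]) -> float:
        total = sum(prices)
        self._repository.save(order_id, total)
        return total


def cli_adapter(use_case: PlaceOrder, line: str) -> str:
    """Primary (driving) adapter: turns raw input into a use-case call."""
    order_id, *raw_prices = line.split()
    total = use_case.execute(order_id, [float(p) for p in raw_prices])
    return f"order {order_id} total {total:.2f}"


repository = InMemoryOrderRepository()
message = cli_adapter(PlaceOrder(repository), "A17 9.50 20.25")
```

Swapping the in-memory adapter for one backed by a real database would mean writing a new class with the same two methods. `PlaceOrder` would not change at all.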

This architecture delivers big wins for enterprise software:

- Flexibility — pick and choose service providers for different needs (like using Google Maps in the U.S. but TomTom in Europe).

- Lower maintenance costs — when a third-party service fails or changes its API, the impact stays inside one adapter instead of spreading through your core logic.

- Vendor freedom — swap out external services by just updating adapters, leaving your core logic untouched.

However, it’s not without its trade-offs:

- Strict separation of concerns can increase upfront development time.

- The additional layer of ports and adapters can complicate system deployment and operations.

Hexagonal architecture is a strong choice for digital transformation initiatives, especially when modernizing legacy systems.

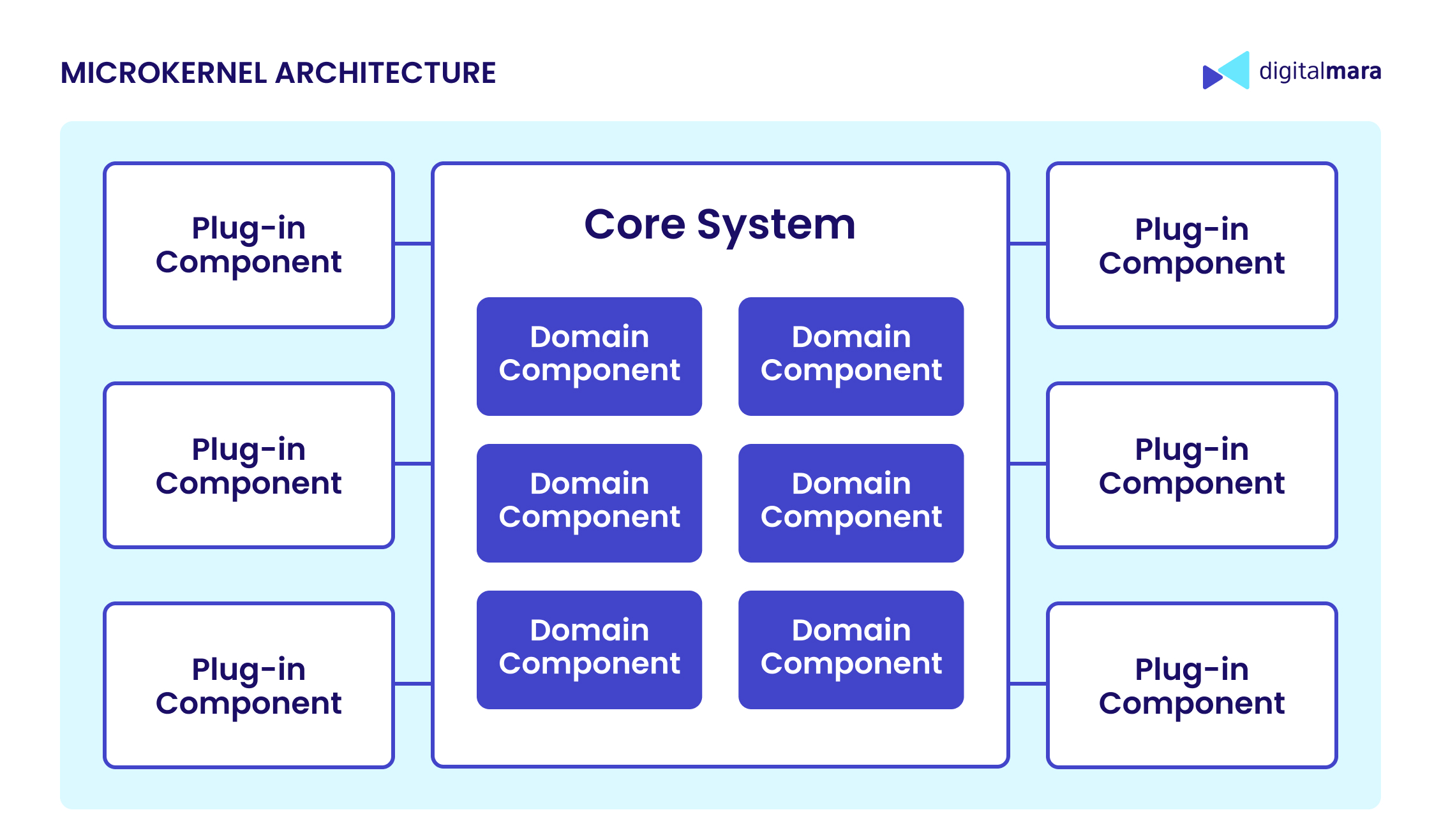

Microkernel Architecture: lightweight core with pluggable extensions

If software architecture styles were personalities, microkernel architecture would be that minimalist friend who keeps only the essentials, but still manages to deliver impressive results by calling in the right resources at the right time.

Much like hexagonal architecture, which emphasizes a clean separation between core logic and external dependencies, the microkernel pattern takes modularity to the next level. Originally developed for operating systems, where the kernel handles only the most basic tasks and delegates the rest, this approach is now widely adopted across various types of software architecture.

In software systems, the microkernel architecture is built around a compact and stable core responsible for essential tasks like coordination and plugin management, while all additional features are delivered through independent modules, or plugins. This design follows a modular structure where each plugin operates in isolation, without shared memory or direct communication with other modules. Everything goes through the core. By keeping the kernel clean and allowing features to plug in as needed, teams can extend or modify functionality without touching the base system, making it easier to scale, maintain, and adapt over time.
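A toy version of this structure might look like the sketch below. The `Kernel` class and the two plugins are invented for illustration; a real plugin system would also need discovery, versioning, and isolation, which are left out here.

```python
from typing import Callable

Plugin = Callable[[str], str]


class Kernel:
    """Minimal core: registers plugins and routes every request to them."""
    def __init__(self) -> None:
        self._plugins: dict[str, Plugin] = {}

    def register(self, name: str, plugin: Plugin) -> None:
        self._plugins[name] = plugin

    def unregister(self, name: str) -> None:
        self._plugins.pop(name, None)

    def run(self, name: str, payload: str) -> str:
        if name not in self._plugins:
            return f"no plugin named '{name}'"
        return self._plugins[name](payload)


kernel = Kernel()
kernel.register("shout", lambda text: text.upper())
kernel.register("reverse", lambda text: text[::-1])

shouted = kernel.run("shout", "hello")
kernel.unregister("reverse")  # removing a feature leaves the core untouched
missing = kernel.run("reverse", "hello")
```

The plugins never call each other; every request passes through `Kernel.run`, which is what keeps features independent of one another.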

Among the main benefits, the following are worth highlighting:

- High flexibility and extensibility — It’s easy to add, swap, or remove features.

- Improved maintainability — Changes in one plugin don’t risk destabilizing others.

- Ideal for digital transformation — Legacy systems can adopt modern features as plugins.

- Scalable software design — The microkernel approach is perfect for systems that need to grow and evolve over time.

This approach does have an occasional fly in the ointment:

- Integration complexity — Ensuring plugins and the core system work together smoothly requires extra effort.

- Versioning issues — Independently evolving plugins can create compatibility challenges.

- Performance overhead — Communication through the kernel may introduce latency.

- Resource consumption — Modular designs often require more memory and processing power.

- Security — The core is a critical part of the system and must be well-secured. Any breach could compromise or bring down the entire system.

So, when used in the right context, this architecture truly shines. It’s especially valuable in systems that evolve incrementally, like IDEs (Eclipse is a classic example), CMSs, and other plugin-based platforms, where it supports flexible growth without constant rewrites.

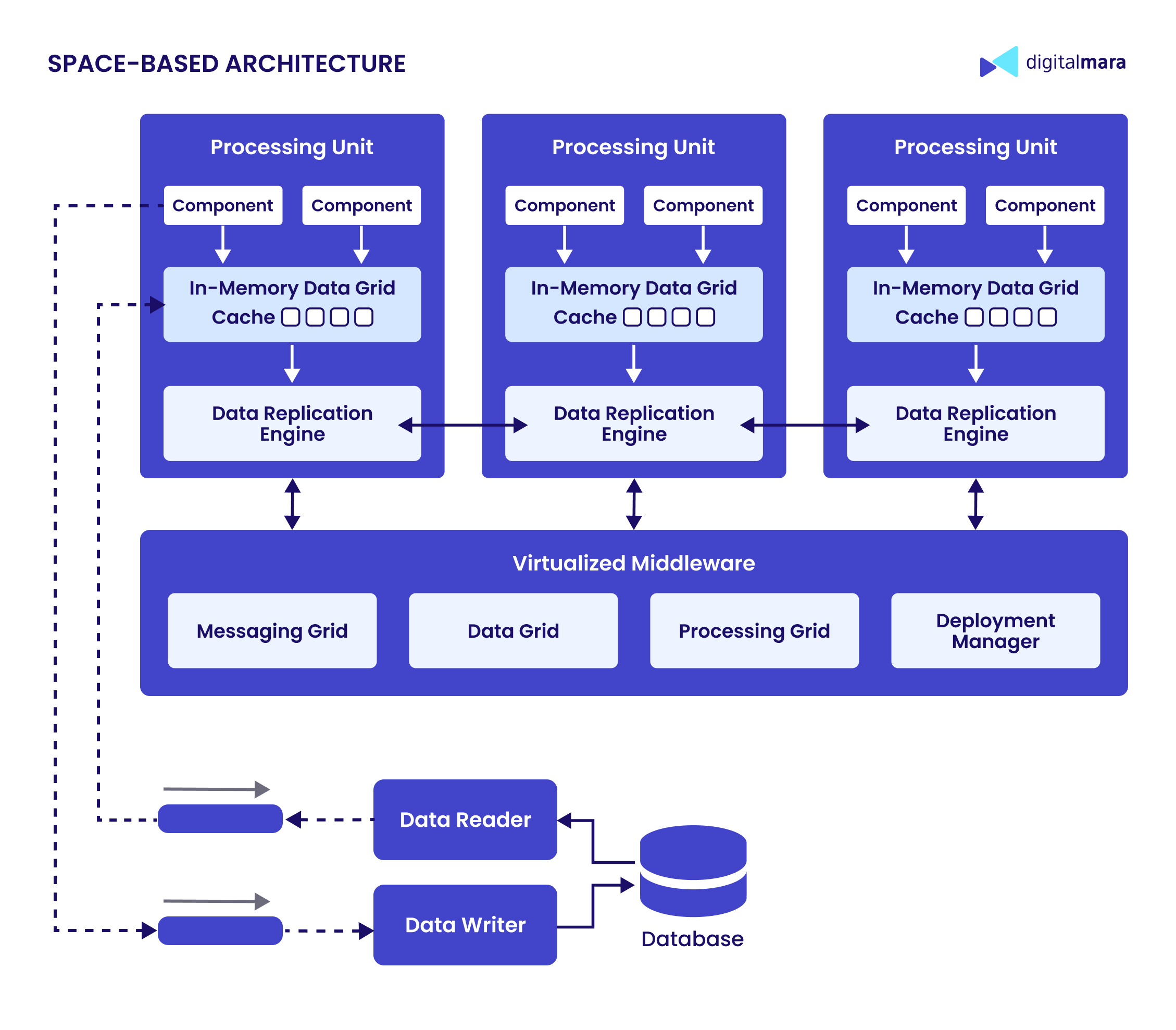

Space-Based Architecture: scaling cloud-native applications beyond traditional limits

Microservices and serverless are great for splitting systems into manageable chunks. But when you’re facing massive traffic spikes, space-based architecture (SBA) steals the show. Built for real-time apps and high-user platforms, SBA delivers instant scaling to handle crushing loads without breaking a sweat.

The concept behind SBA dates back to the 1980s, when David Gelernter introduced tuple spaces. The main idea? Don’t manage everything from a central point. Instead, spread data and tasks across a shared space where different parts of the system can work independently. Imagine a big crowd: instead of lining everyone up to do one task at a time, you hand out the work, and everyone gets busy at once. That’s the heart of SBA — breaking work into pieces and getting it done in parallel.

SBA works by spreading the software system across a data grid — the “space” — where independent processing units live. Each unit handles its own logic and keeps a local chunk of data in memory. This reduces load on a central database and delivers much faster response times. It fits perfectly with cloud-native environments, where stateless, elastic systems are the norm.
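The partitioning idea can be illustrated with a small in-process sketch. It assumes a hypothetical shopping-cart workload; `Space` and `ProcessingUnit` are made-up names, and the replication and failover that real data grids provide are deliberately omitted.

```python
import zlib


class ProcessingUnit:
    """Holds its own logic plus a local, in-memory slice of the data."""
    def __init__(self, name: str) -> None:
        self.name = name
        self.cart_items: dict[str, list[str]] = {}

    def add_to_cart(self, user_id: str, item: str) -> int:
        items = self.cart_items.setdefault(user_id, [])
        items.append(item)
        return len(items)


class Space:
    """Routes each request to the unit that owns the key's partition."""
    def __init__(self, unit_count: int) -> None:
        self.units = [ProcessingUnit(f"unit-{i}") for i in range(unit_count)]

    def unit_for(self, user_id: str) -> ProcessingUnit:
        # crc32 is stable across runs, unlike Python's built-in hash() for str.
        index = zlib.crc32(user_id.encode()) % len(self.units)
        return self.units[index]

    def add_to_cart(self, user_id: str, item: str) -> int:
        return self.unit_for(user_id).add_to_cart(user_id, item)


space = Space(unit_count=3)
space.add_to_cart("alice", "keyboard")
alice_count = space.add_to_cart("alice", "mouse")
bob_count = space.add_to_cart("bob", "monitor")
```

Each user's cart lives in exactly one unit's memory, so requests for that user never touch a central database. Simple modulo routing like this reshuffles keys whenever the number of units changes, which is one reason production grids use more careful partitioning schemes.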

Why space-based architecture is awesome for enterprise software:

- Scales easily — need more power? Just add more nodes, no major changes required.

- Highly available — if one node fails, others pick up the load.

- Super fast — in-memory operations keep latency low.

- Cost-efficient — horizontal scaling drives cost optimization in software architecture.

However, some things can be rather tricky:

- Setup is complex — more effort is needed in comparison with traditional models.

- Data syncing — keeping data consistent across nodes can be a headache.

- Learning curve — teams need to understand distributed systems and memory management.

As AI-powered software gets more attention, SBA is a perfect fit: models for real-time fraud detection, recommendations, or personalization can run directly within processing units, cutting out the need for external calls. You can even spread AI features across the grid to handle big workloads more efficiently.

Some of the best-known real-world examples of SBA include e-commerce platforms like Amazon and eBay, telecom systems like Verizon and Vodafone, and of course, online game backends, which use SBA to scale up real-time multiplayer interactions.

So, when you’re doing a software architecture evaluation for a high-load system, SBA is definitely worth considering. It fills the gaps where microservices and serverless might struggle, delivering speed, scalability, and resilience.

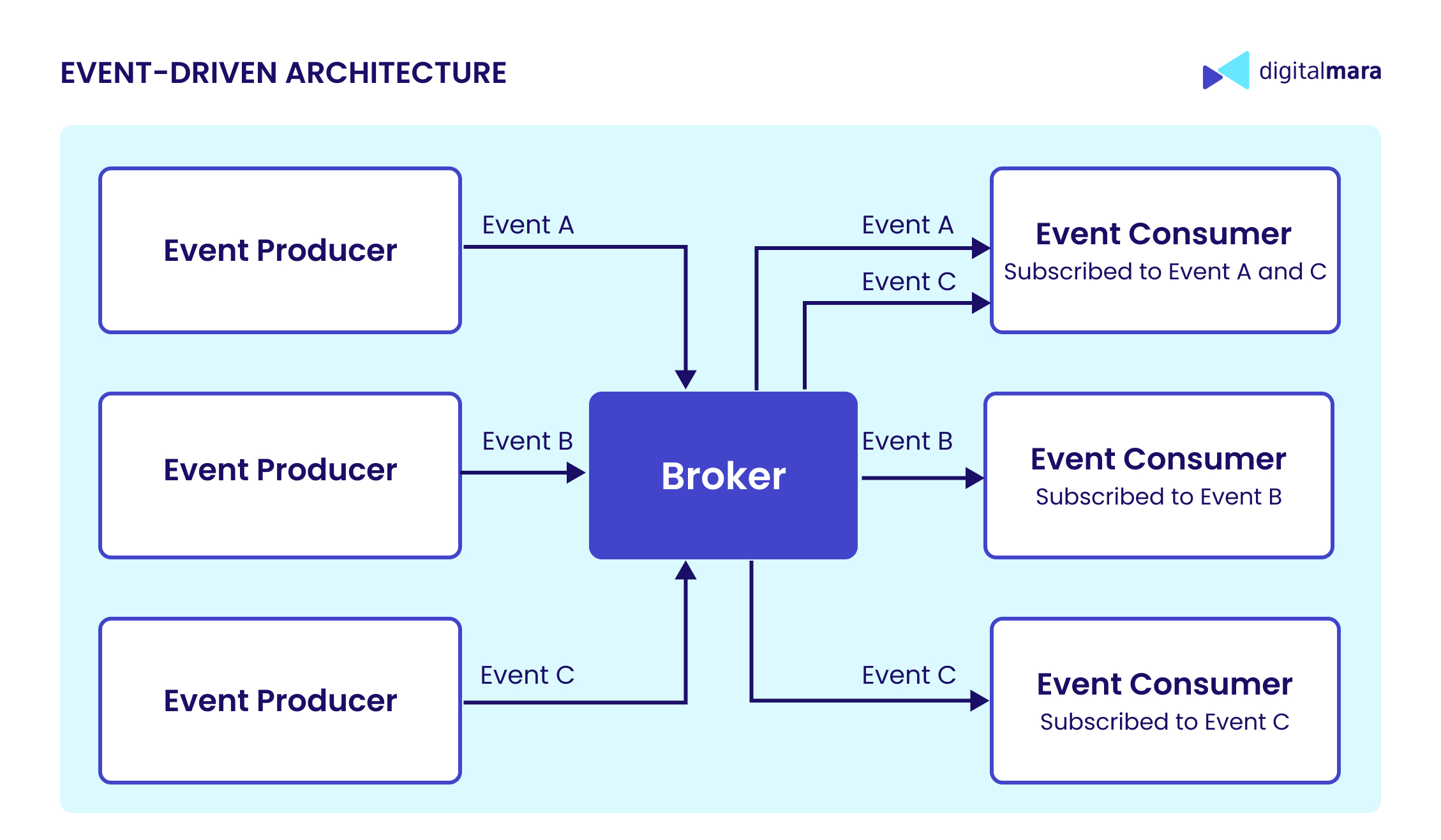

Event-Driven Architecture (EDA): a reactive backbone for modern systems

Event-driven architecture (EDA) is a modern approach that moves away from the traditional, tightly connected design. Instead, it uses asynchronous, loosely coupled communication between services. Unlike space-based architecture, which handles heavy traffic by spreading data across an in-memory grid of replicated nodes, EDA takes a different route: it uses events to keep systems flexible, reactive, and easier to adapt over time.

In an event-driven architecture setup, services don’t call each other directly. They publish events like “OrderPlaced” or “PaymentReceived” to an event bus or message broker. Other services can then subscribe to these events and respond as needed. This push-based model helps services scale and evolve independently, without being tightly linked.
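The publish/subscribe flow can be sketched with a tiny in-process event bus. This is only an illustration: `EventBus` is a hypothetical stand-in for a real message broker, delivery here is synchronous rather than asynchronous, and the event fields (`order_id`, `sku`, `qty`) are assumptions.

```python
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """In-process stand-in for a message broker."""
    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = {}

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The publisher has no idea who (if anyone) is listening.
        for handler in self._subscribers.get(event_type, []):
            handler(payload)


bus = EventBus()
emails_sent: list[str] = []
stock = {"mug": 10}


def send_confirmation(event: dict) -> None:
    emails_sent.append(event["order_id"])


def reserve_stock(event: dict) -> None:
    stock[event["sku"]] -= event["qty"]


bus.subscribe("OrderPlaced", send_confirmation)
bus.subscribe("OrderPlaced", reserve_stock)
bus.publish("OrderPlaced", {"order_id": "A17", "sku": "mug", "qty": 2})
```

Adding a third reaction to `OrderPlaced`, say a loyalty-points service, would take one more `subscribe` call and no change to the code that publishes the event.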

Why EDA can be a strong choice:

- High decoupling — Services work on their own, which makes development and updates easier.

- Scalability and fault tolerance — Event queues can absorb traffic spikes, and if one service fails, others keep running.

- Easy to extend — You can plug in new services that listen to existing events without changing what’s already there.

- Agile and cost-effective — Event-driven microservices use resources only when needed, helping save on infrastructure costs.

But there are some catches:

- Eventual consistency — Since everything’s asynchronous, systems might not always be fully up-to-date right away.

- Harder to debug — It’s trickier to follow the path of an event through different services.

- Duplicate handling — System components might get the same event more than once, so you need logic to deal with that.

- Workflow orchestration — Coordinating events or sending results back to users can get complicated.
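Duplicate handling is usually solved by making consumers idempotent. One simple approach, sketched below, is to remember the IDs of events already processed. The `event_id` field and the `PaymentConsumer` class are assumptions for this example, and a production system would keep the seen IDs in durable storage, not in memory.

```python
class PaymentConsumer:
    """Applies each event at most once by remembering processed event IDs."""
    def __init__(self) -> None:
        self.balance = 0
        self._seen_ids: set[str] = set()

    def handle(self, event: dict) -> bool:
        if event["event_id"] in self._seen_ids:
            return False  # duplicate delivery: ignore it
        self._seen_ids.add(event["event_id"])
        self.balance += event["amount"]
        return True


consumer = PaymentConsumer()
event = {"event_id": "evt-1", "amount": 50}
first = consumer.handle(event)
second = consumer.handle(event)  # the broker redelivers the same event
```

The second delivery is recognized and skipped, so the balance reflects the payment exactly once.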

Popular examples of event-driven architecture include Netflix, which uses it to deliver real-time personalization and ensure a smooth user experience; Uber, which relies on event streams to process geolocation data, trip updates, and dynamic pricing; and LinkedIn, which built its Kafka-based system to power personalized newsfeeds and AI-driven recommendations.

As one of the core fundamentals of software architecture today, EDA helps build modular, responsive systems that are easy to scale and adapt. When combined with microservices, it speeds up feature delivery, reduces complexity, and keeps the architecture ready for future changes.

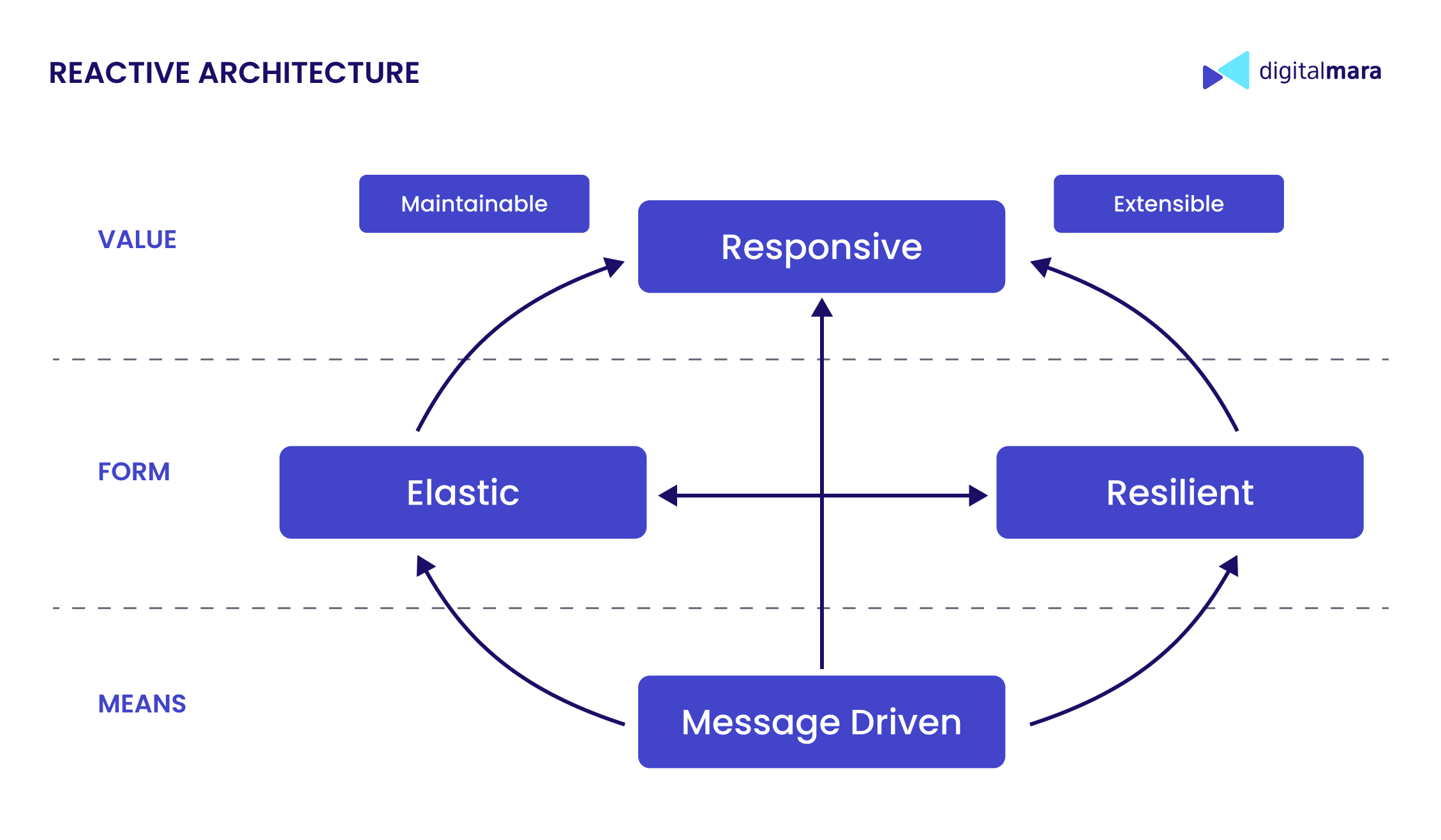

Reactive Architecture: a synthesis of modern principles

And at the top of our hit parade: reactive architecture, the most mysterious one.

As we’ve seen with different architectural approaches, modern systems need more than just modular design. When developing cloud-native, highly distributed, or IoT applications, developers face the challenge of unreliable hardware and networks. Reactive architecture directly tackles these issues by providing abstractions, programming models, protocols, and error-handling strategies designed to avoid the complexity and leaky abstractions that often arise.

So what is reactive architecture? Frankly speaking, it isn’t really a strict term; it’s more of a philosophy, as defined by the Reactive Manifesto. This kind of approach focuses on building systems that stay responsive, no matter how conditions change.

The core principles of reactive architecture:

- Always answer — Even when things are breaking, keep responses quick.

- Expect messiness — Networks lag, data gets stale, stuff fails — plan for it.

- Failures happen — Build components that can pick themselves back up or provide degraded service.

- Stay independent — Let system parts do their own thing and chat async.

- Pick your consistency — Sometimes “good enough” now beats “perfect” later.

- Loosen time ties — Don’t make components wait for each other.

- Spread out — Run stuff in different places to stay available.

- Roll with the punches — Scale up/down automatically as load changes.
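Two of these principles, answering quickly and degrading gracefully, can be shown with nothing more than Python's standard `asyncio` module. In this sketch, `fetch_recommendations` is a hypothetical slow dependency and the fallback list is invented. The point is only the pattern of bounding the wait and returning something useful anyway.

```python
import asyncio


async def fetch_recommendations(user_id: str) -> list[str]:
    """Hypothetical slow dependency, e.g. a remote recommendation service."""
    await asyncio.sleep(1.0)  # simulate a stalled network call
    return ["personalized-1", "personalized-2"]


async def recommendations_or_fallback(user_id: str, timeout: float) -> list[str]:
    try:
        # Bound the wait so the caller always gets a timely answer.
        return await asyncio.wait_for(fetch_recommendations(user_id), timeout)
    except asyncio.TimeoutError:
        # Degraded but useful response instead of an error or a hang.
        return ["bestseller-1", "bestseller-2"]


result = asyncio.run(recommendations_or_fallback("alice", timeout=0.05))
```

The caller gets generic bestsellers after 50 milliseconds instead of waiting a full second for personalized results, which is the "good enough now" trade-off from the list above.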

To put these principles into practice, you need tools like Lightbend’s Reactive Platform. This includes Akka, which handles asynchronous messaging, failure recovery, and elastic scaling right out of the box, and the Play Framework, which works seamlessly with Akka to build non-blocking, event-driven web apps.

Since reactive architecture aligns with SBA, EDA, and other approaches we’ve discussed, it incorporates both the benefits and challenges of the architectural styles mentioned earlier.

What’s the takeaway?

We’ve checked out some of the top architecture styles out there. Some play nicely together, while others might be total overkill for what you need. That’s why nailing the software architecture basics first is essential: it saves you from epic fails later. To make the best choice, it’s wise to rely on professionals. Custom software development teams can help you design the architecture or develop the entire solution.